AI-Enabled Secure and Distributed Additive Manufacturing

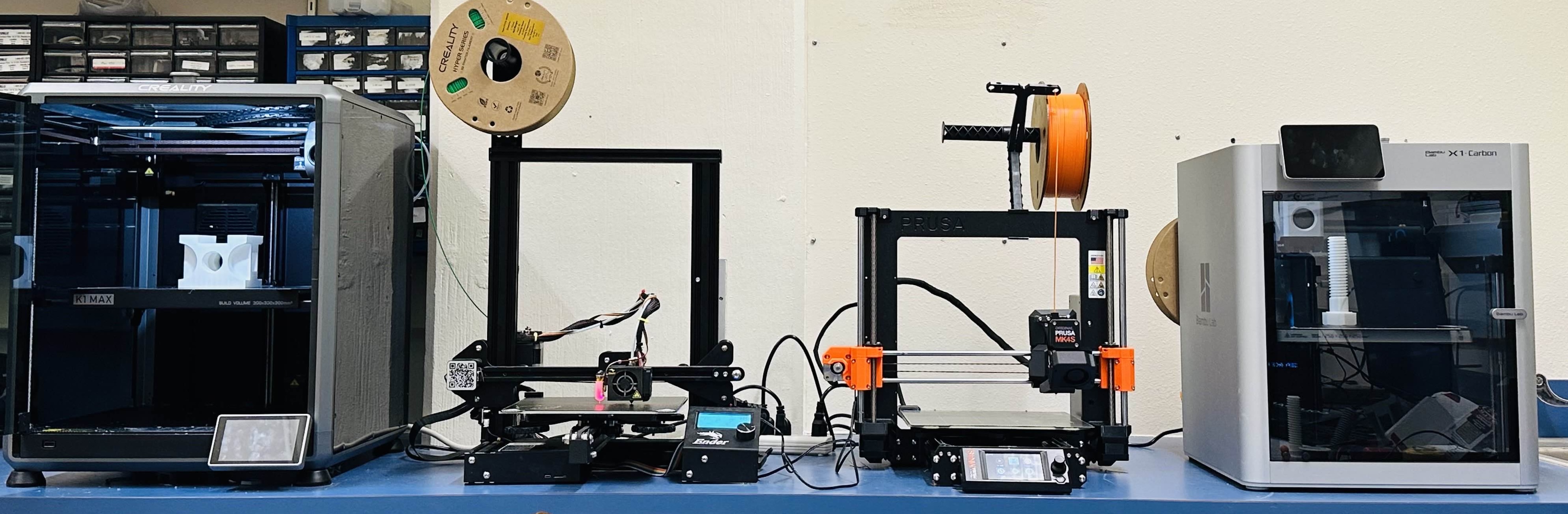

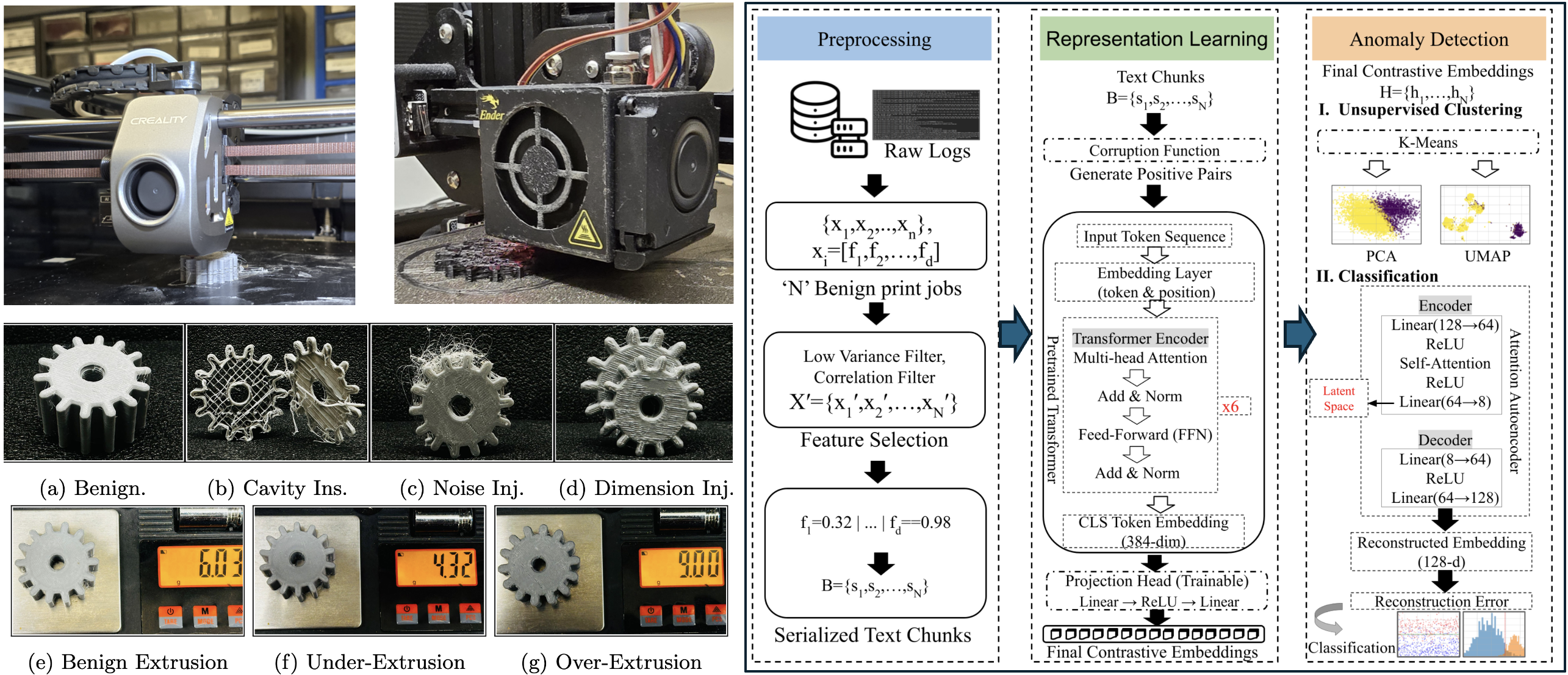

The convergence of AI and security in cyber-physical systems (CPS) is creating powerful new opportunities to enhance safety, reliability, and resilience in intelligent infrastructures. As CPS applications expand across manufacturing, transportation, and critical infrastructure, trustworthy AI capable of detecting anomalies, mitigating threats, and supporting human decision-making has become essential to ensuring safe and autonomous operation. Additive Manufacturing (AM), commonly known as 3D printing, has revolutionized modern manufacturing by enabling the rapid prototyping and production of complex components with minimal material waste. Its integration into safety-critical domains such as aerospace, healthcare, automotive, and defense has made the underlying infrastructure of AM systems a prime target for cyber-physical threats. With the proliferation of network-connected 3D printers, adversaries can exploit attack vectors across the digital thread, from CAD design and STL/G-code translation through network communication to firmware execution, potentially sabotaging the mechanical integrity or dimensional accuracy of printed parts, or stealing the intellectual property (IP) they embody.
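As a simple illustration of protecting one link in the digital thread, the sketch below checks that a G-code file arriving at the printer matches a digest recorded when it was generated. This is not a description of our system; the function names `gcode_digest` and `is_untampered` are hypothetical, and the sketch assumes the reference digest reaches the printer over a separate trusted channel.

```python
import hashlib
import hmac


def gcode_digest(gcode: bytes) -> str:
    """Return the SHA-256 hex digest of a G-code payload."""
    return hashlib.sha256(gcode).hexdigest()


def is_untampered(gcode: bytes, expected_digest: str) -> bool:
    """Compare the payload's digest with a trusted reference digest.

    hmac.compare_digest performs a constant-time comparison, which avoids
    leaking how many leading characters matched.
    """
    return hmac.compare_digest(gcode_digest(gcode), expected_digest)


if __name__ == "__main__":
    # Hypothetical G-code fragment recorded at slicing time.
    original = b"G28\nG1 X10 Y10 E1.0 F1500\n"
    reference = gcode_digest(original)

    # A modified file (e.g. an altered extrusion value) fails the check.
    modified = b"G28\nG1 X10 Y10 E0.6 F1500\n"
    print(is_untampered(original, reference))
    print(is_untampered(modified, reference))
```

A digest check of this kind only covers modification in transit or at rest; it does not address attacks at the CAD or firmware stages mentioned above.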

Vehicular Security and Driver Behavioral Abstraction

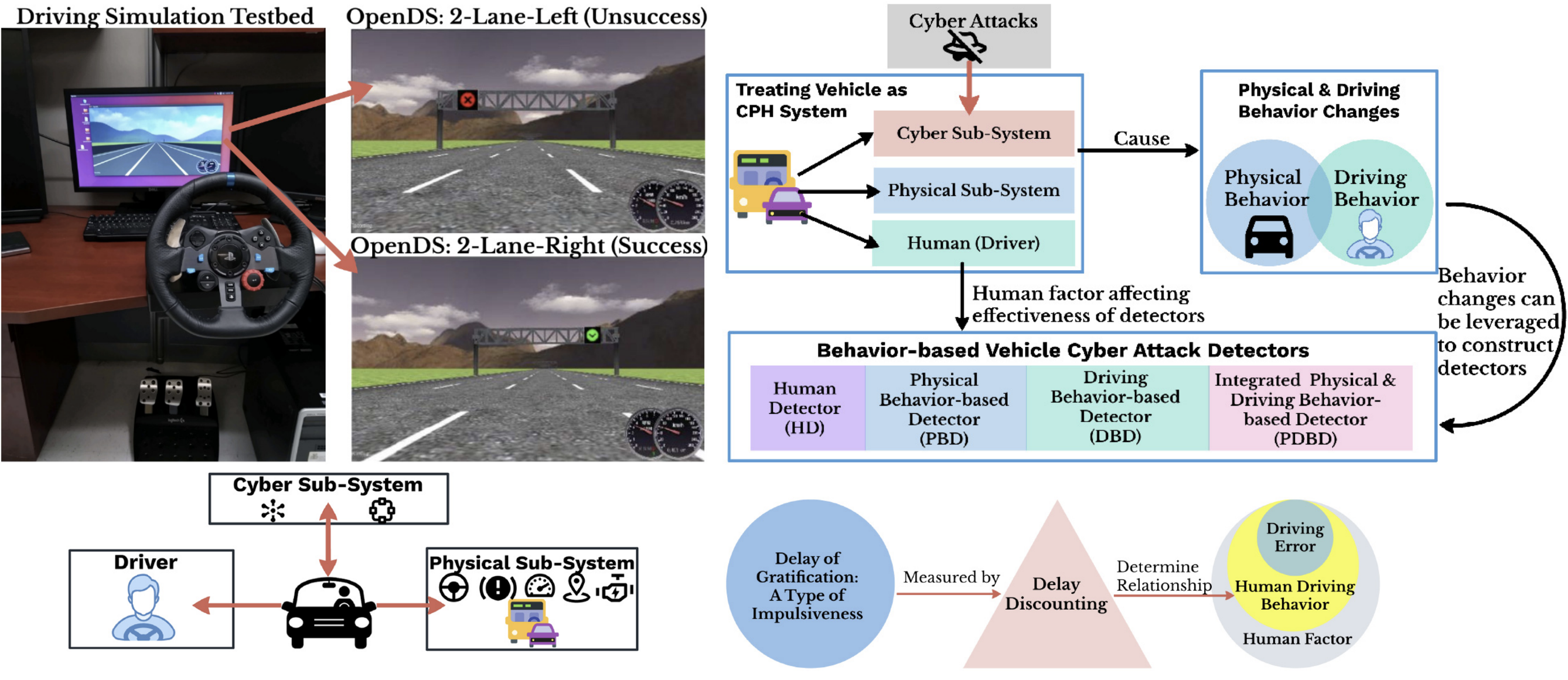

As more and more vehicles are connected to the Internet, cyber attacks against vehicles are becoming a real threat with potentially devastating consequences. This highlights the importance of detecting vehicle cyber attacks before fatal accidents occur. One natural method for tackling this problem is to adapt existing approaches for detecting attacks in enterprise networks, but this has achieved limited success. We instead treat vehicles as cyber–physical–human systems, leading to a novel framework called exploiting human, physical and driving behaviors to detect vehicle cyber attacks (ExHPD). The framework has four detectors: 1) a human detector; 2) a physical behavior-based detector; 3) a driving behavior-based detector (DBD); and 4) an integrated physical and DBD.
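The four-detector structure can be sketched roughly as follows. This is a minimal illustration only, not the ExHPD implementation: the `Sample` fields, the threshold rules, and every numeric value are hypothetical placeholders chosen to show how four independent detectors might be run side by side on the same vehicle state.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Sample:
    """One hypothetical snapshot of vehicle state (all fields are assumptions)."""
    driver_flagged: bool       # driver reported unexpected vehicle behavior
    speed_mps: float           # vehicle speed, metres per second
    accel_mps2: float          # longitudinal acceleration
    steering_rate_dps: float   # steering-wheel rate, degrees per second


def human_detector(s: Sample) -> bool:
    # Placeholder: treat a driver report as the human signal.
    return s.driver_flagged


def physical_detector(s: Sample) -> bool:
    # Placeholder rule: acceleration beyond an assumed physical limit.
    return abs(s.accel_mps2) > 12.0


def driving_detector(s: Sample) -> bool:
    # Placeholder rule: steering rate outside an assumed normal range.
    return abs(s.steering_rate_dps) > 400.0


def integrated_detector(s: Sample) -> bool:
    # Placeholder rule joining a physical quantity (speed) with a driving
    # quantity (steering rate): moderate steering is suspicious at high speed.
    return s.speed_mps > 30.0 and abs(s.steering_rate_dps) > 200.0


DETECTORS: Dict[str, Callable[[Sample], bool]] = {
    "human": human_detector,
    "physical": physical_detector,
    "driving": driving_detector,
    "integrated": integrated_detector,
}


def run_detectors(s: Sample) -> Dict[str, bool]:
    """Run every detector on one sample and report which ones fired."""
    return {name: detect(s) for name, detect in DETECTORS.items()}


if __name__ == "__main__":
    sample = Sample(driver_flagged=False, speed_mps=33.0,
                    accel_mps2=1.5, steering_rate_dps=250.0)
    print(run_detectors(sample))
```

In this toy example only the integrated rule fires for the sample shown, since neither the acceleration nor the steering rate crosses its individual threshold. In practice each detector would be a learned or model-based component rather than a fixed threshold.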

Human-Centered Multimodal AI for Assistive Perception

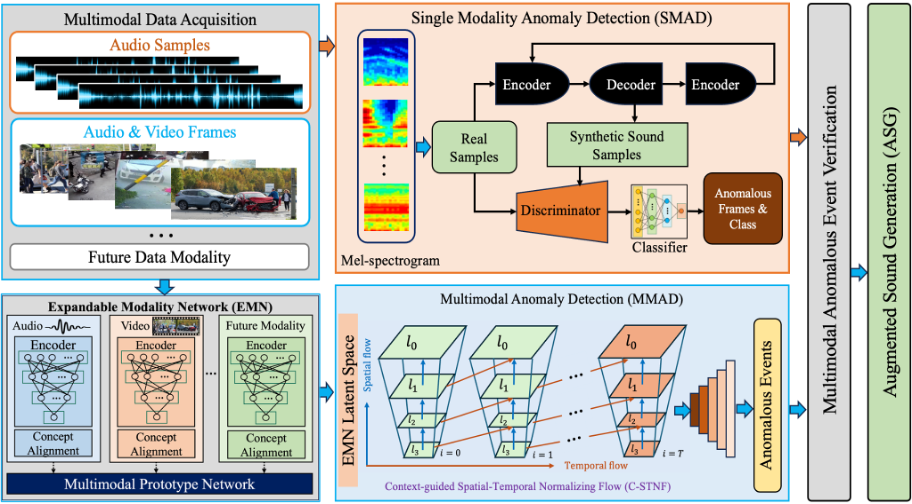

A number of research thrusts in the AIVS Lab focus on multimodal, agentic AI systems that enhance human capability and support individuals in safety-critical or perception-limited environments. In the SoundEYE project, an NSF-funded collaboration with San Francisco State University, we are developing an AI-augmented perceptual system for visually impaired individuals. SoundEYE constructs audio-spatial representations of the environment and delivers natural sound-based feedback to guide user perception and navigation. Beyond its immediate societal impact, the project advances our broader vision of human-centered AI, where multimodal perception, contextual reasoning, and adaptive feedback extend human abilities rather than merely replicating machine intelligence. In the AVSafe project, we are developing a cloud-edge, adaptive multimodal AI framework to enhance driver awareness and ensure robust vehicular safety. AVSafe integrates multimodal cues and cognitive modeling to support driver-critical interventions. By incorporating natural sound augmentation as a complementary sensory channel, the system facilitates rapid situational understanding and improves driver response in unforeseen or emergency scenarios.

Spiking RL for Neuromorphic Learning

As computing systems approach the physical limits of silicon scaling and as computation becomes a defining bottleneck for future AI, we are developing efficient, energy-aware computing architectures for edge intelligence. Through this exploratory project, we are investigating neuromorphic and FPGA-based analog architectures capable of implementing reinforcement-learning agents for continuous-control tasks in resource-constrained environments. This research complements our AI-CPS focus by addressing a central challenge in embedded intelligent systems: enabling low-power, high-efficiency computation at the network edge. In our ongoing work on analog memristor-based learning, we explore hybrid models that combine analog device physics with deep learning to realize near-sensor intelligence. Our long-term objective is to design reconfigurable architectures that support real-time perception and decision-making in autonomous drones, vehicles, and manufacturing robots.

Micro-Expression Analysis for Data-Informed STEM Education

A growing component of my research focuses on advancing human-centered AI for teaching and learning, particularly through the development of micro-expression–based learning analytics. We are developing an AI-driven Micro-Expression Analysis System (MEAS) designed to improve teaching and learning in STEM classrooms. MEAS uses computer vision and deep learning to detect subtle facial-expression cues that signal student engagement, confusion, or cognitive difficulty in real time.